Executive Summary

A well-known American department store chain offers a wide range of products, including clothing, accessories, beauty products, home goods, and more. During the pandemic, the client shifted its focus to its eCommerce channel, which then grew exponentially.

Relying on third-party tools for data processing posed challenges with scale, speed, cost, and personalization, so the client set out to develop an in-house ETL tool for greater control and customization. By adopting Google BigQuery and other cloud-based services, the Factspan team improved data processing efficiency and cut both the number of queries and data processing execution time by around 50%.

In addition, the data engineering teams became about 30% more productive by working in simple SQL. The client estimates annual cost savings of about 20% from eliminating the need for external vendor support.

About the Client

The client company has a long history in the retail industry and is a well-known, reputable brand in the US. The company operates numerous locations across the United States and runs an online store, giving customers convenient shopping options. The client is recognized for its diverse product selection, competitive prices, and frequent sales events. The company also often collaborates with designers and brands to offer exclusive collections, further enhancing its appeal to shoppers.

Business Challenge

During the pandemic, customers' purchasing behavior shifted from physical retail stores to online marketplaces, prompting the client to prioritize its eCommerce channel. This led to a surge in sales and rapid growth of its eCommerce website. With expanding operations and a growing customer base, efficient data processing became crucial.

The biggest challenge the client faced was its reliance on third-party tools for data engineering processes. The client also identified flaws in these custom third-party tools, such as limited scalability and compatibility issues, which hindered its ability to make improvements for each use case. In response, it devised a strategy to develop an in-house tool that could replace all off-the-shelf solutions.

The company aimed to develop a proprietary ETL tool that would give it full control over its infrastructure while minimizing costs. It considered the upfront investment in building its own tool a more viable option than relying on custom tools from other vendors.

Our Solution

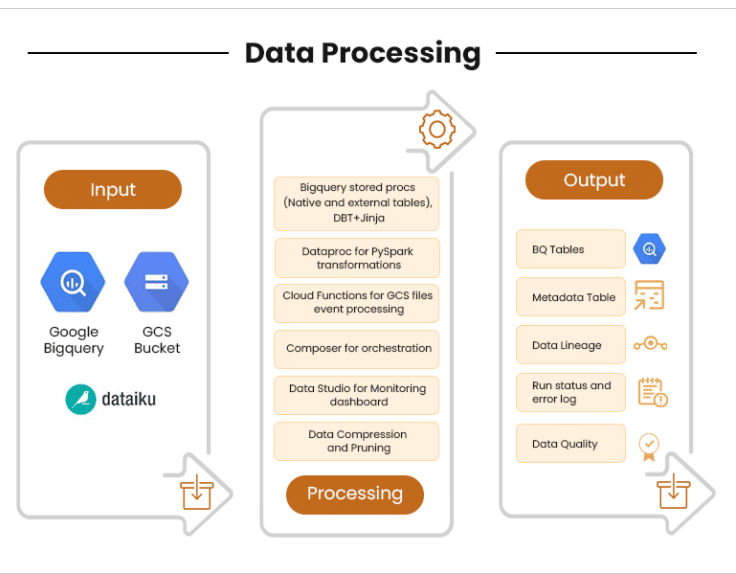

In building the in-house ETL tool, the client adopted Google BigQuery as the central repository for data ingestion and storage, and streamlined the process by using a Google Cloud Storage (GCS) bucket as an intermediate data repository.
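The staging pattern described above, with files landing in GCS and then being queried and stored in BigQuery, can be sketched as a small helper that generates standard BigQuery DDL. This is a minimal sketch under stated assumptions, not the client's actual load mechanism, which the case study does not describe: it assumes the staged files are Parquet, and the dataset, table, and bucket names are hypothetical. The helper only builds the SQL; submitting it (for example, through the `google-cloud-bigquery` client's `Client.query`) is left out.

```python
from typing import List


def build_staging_sql(dataset: str, table: str, gcs_uri: str,
                      file_format: str = "PARQUET") -> List[str]:
    """Return two BigQuery statements: one that exposes staged GCS files as an
    external table, and one that copies them into a native table."""
    external_table = f"{dataset}.{table}_ext"
    native_table = f"{dataset}.{table}"
    return [
        # External table: BigQuery queries the files where they sit in GCS.
        f"CREATE OR REPLACE EXTERNAL TABLE `{external_table}`\n"
        f"OPTIONS (format = '{file_format}', uris = ['{gcs_uri}'])",
        # Native table: materialize the data in BigQuery-managed storage.
        f"CREATE OR REPLACE TABLE `{native_table}` AS\n"
        f"SELECT * FROM `{external_table}`",
    ]


if __name__ == "__main__":
    # Hypothetical dataset, table, and bucket names.
    for statement in build_staging_sql(
        "retail_staging", "orders", "gs://example-bucket/orders/*.parquet"
    ):
        print(statement, end=";\n\n")
```

Keeping an external table in front of the native one lets the raw files be inspected in place before they are copied into faster, BigQuery-managed storage.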

The data engineering team used Google BigQuery's capabilities, including stored procedures and native and external tables, along with dbt and Jinja templating for consistent data preparation. For complex transformations and advanced analytics, they ran PySpark jobs on Dataproc, Google Cloud's managed Spark and Hadoop service.
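Templating keeps data preparation consistent because one definition generates the SQL for many tables. The sketch below illustrates that idea with Python's standard-library `string.Template` as a dependency-free stand-in for the Jinja templating the team used with dbt; it is not dbt or Jinja syntax. The cleaning rule (trim string columns, drop rows with a NULL key) and all table and column names are hypothetical.

```python
from string import Template
from typing import Sequence

# One shared template, in the spirit of a dbt/Jinja macro, so every source
# table is cleaned with the same rules.
CLEAN_TEMPLATE = Template(
    "SELECT\n$columns\nFROM `$source`\nWHERE $key IS NOT NULL"
)


def render_clean_sql(source: str, key: str,
                     string_columns: Sequence[str],
                     other_columns: Sequence[str]) -> str:
    """Render a SELECT that trims string columns, passes other columns
    through unchanged, and drops rows with a NULL key."""
    columns = [f"  TRIM({c}) AS {c}" for c in string_columns]
    columns += [f"  {c}" for c in other_columns]
    return CLEAN_TEMPLATE.substitute(
        columns=",\n".join(columns), source=source, key=key
    )


if __name__ == "__main__":
    # Hypothetical table and column names.
    print(render_clean_sql("retail_staging.orders", "order_id",
                           ["customer_email", "store_code"],
                           ["order_id", "order_total"]))
```

In dbt, the equivalent logic would typically live in a Jinja macro called from each model, so a change to the cleaning rule propagates to every table that uses it.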

Workflow orchestration and monitoring were handled through Cloud Functions and Google Cloud Composer. Data Studio provided intuitive monitoring dashboards for tracking key metrics, supporting decision-making by the Merchant Ops team.
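Cloud Composer is managed Apache Airflow, in which a pipeline is declared as a directed acyclic graph (DAG) of dependent tasks. The sketch below is not Airflow code: it uses Python's standard-library `graphlib` (Python 3.9+) to show how a dependency graph determines which steps run first and which could run in parallel. The stage names and their dependencies are hypothetical, loosely following the components named in this case study.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline stages, each mapped to the stages it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "load_bigquery_tables": {"land_files_in_gcs"},
    "run_dbt_models": {"load_bigquery_tables"},
    "run_dataproc_pyspark": {"load_bigquery_tables"},
    "refresh_dashboards": {"run_dbt_models", "run_dataproc_pyspark"},
}


def execution_batches(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into batches; every task in a batch has all of its
    upstream dependencies satisfied, so a batch could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock downstream tasks
    return batches


if __name__ == "__main__":
    for step, batch in enumerate(execution_batches(PIPELINE), start=1):
        print(step, batch)
```

In an actual Composer environment, the same graph would be declared as Airflow tasks linked with the `>>` operator, and the Airflow scheduler, rather than a loop like this one, would decide when each task runs.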

Business Impact

- With full control of its infrastructure, the client eliminated the need for external vendor support, saving approximately 20% in costs per year.

- The in-house ETL tool reduced the number of queries by 50%.

- Data processing execution time was cut in half.

- By working in simple SQL, the data engineering teams experienced a 30% increase in productivity.

