Why this blog?

This post is for anyone looking to strengthen their data integration strategy. It introduces ELTV, an approach that adds an explicit validation step to ELT in order to improve data quality and governance. You’ll learn how ELTV can streamline your processes and support better decision-making, and you’ll find practical considerations for implementing it in your organization.

Every data practitioner is familiar with the long-standing debate over data integration methodologies: ETL (Extract, Transform, Load) versus ELT (Extract, Load, Transform). Both frameworks have proven their worth, each with distinct advantages suited to different data environments and business needs. However, a new paradigm is emerging that promises to take data integration a step further: ELTV (Extract, Load, Transform, Validate). This approach builds on the agility of ELT while adding a dedicated layer of data validation to ensure higher quality and reliability in data analytics.

Introducing the ELTV Paradigm

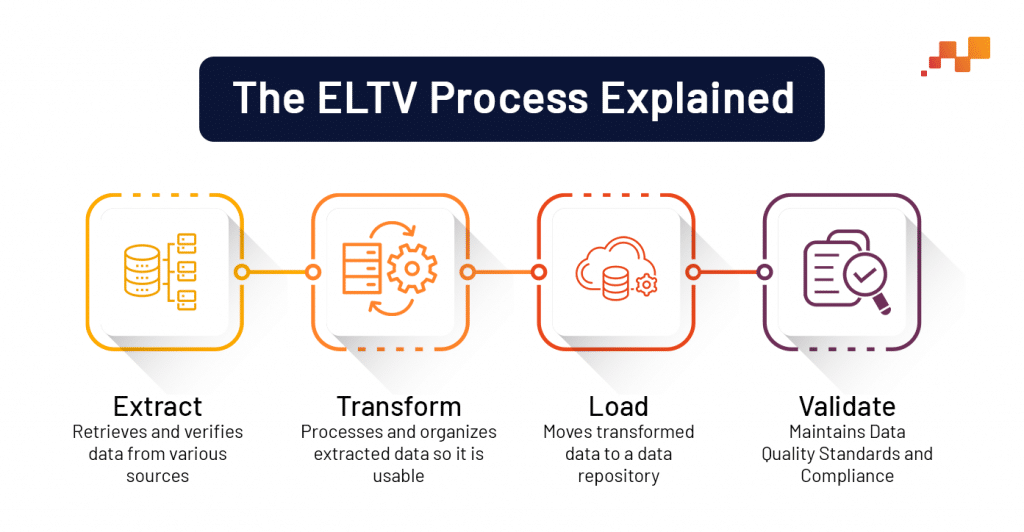

At its core, ELTV enhances the traditional ELT process by adding a validation step, which acts as a gatekeeper so that only data meeting predefined quality standards and business rules moves forward for analysis. The process begins with data being extracted from various source systems, after which the raw data is loaded directly into a scalable data lake environment. This approach leverages the data lake’s processing power to handle vast amounts of data efficiently.

The transformation phase is flexible; data can be restructured and prepared based on current analytical needs without the sequential dependencies typical in ETL processes. The added validation step in ELTV thoroughly checks the transformed data against specific quality criteria and business rules before it is deemed fit for use.
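The four stages described above can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions: the sample order records, the table names (`raw_orders`, `orders`, `orders_validated`, `orders_rejected`), and the two rules are all hypothetical, and an in-memory dictionary stands in for the data lake.

```python
from typing import Callable

Row = dict
Rule = Callable[[Row], bool]


def extract() -> list[Row]:
    # Stand-in for reading from source systems (hypothetical sample data).
    return [
        {"order_id": "1", "amount": "19.99", "country": "us"},
        {"order_id": "2", "amount": "-5.00", "country": "de"},
        {"order_id": "3", "amount": "42.50", "country": ""},
    ]


def load(rows: list[Row], lake: dict) -> None:
    # Raw data lands untouched in the "lake" (here, a dict of tables).
    lake["raw_orders"] = list(rows)


def transform(lake: dict) -> None:
    # Transformation runs inside the lake, on top of the raw table.
    lake["orders"] = [
        {
            "order_id": int(r["order_id"]),
            "amount": float(r["amount"]),
            "country": r["country"].upper(),
        }
        for r in lake["raw_orders"]
    ]


# Hypothetical quality criteria and business rules.
RULES: dict[str, Rule] = {
    "amount_non_negative": lambda r: r["amount"] >= 0,
    "country_present": lambda r: bool(r["country"]),
}


def validate(lake: dict) -> None:
    # Gatekeeper: only rows that satisfy every rule move forward.
    passed, rejected = [], []
    for row in lake["orders"]:
        if all(rule(row) for rule in RULES.values()):
            passed.append(row)
        else:
            rejected.append(row)
    lake["orders_validated"] = passed
    lake["orders_rejected"] = rejected


def run_pipeline() -> dict:
    lake: dict = {}
    load(extract(), lake)
    transform(lake)
    validate(lake)
    return lake


lake = run_pipeline()
```

Keeping `raw_orders` untouched means the transform and validate steps can be rerun with different logic without going back to the source systems.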

The Power of Validation in Ensuring Data Integrity

This validation step elevates ELTV beyond a simple ELT variant. Here’s how it empowers data integration:

- Enhanced Data Governance: By incorporating validation checks that align with predefined data quality standards and compliance requirements, ELTV significantly reduces the risk of errors propagating through downstream analytics processes. This proactive approach to data governance helps maintain the integrity and reliability of data throughout the lifecycle.

- Iterative Refinement: Validation in ELTV is not just a barrier but a diagnostic tool. It enables data engineers to identify and rectify data quality issues at their source, facilitating an iterative refinement of data quality. This ongoing process helps in fine-tuning the transformations and improving the overall quality of the data repository over time.
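To illustrate validation as a diagnostic tool rather than a simple pass/fail gate, the sketch below reports which rule each failing row broke and how often each rule fails overall. The customer records, the rule names, and the `diagnose` helper are hypothetical and exist only for this example.

```python
from collections import Counter


def diagnose(rows, rules):
    """Return per-rule failure counts and the failing rows with their broken rules."""
    failures = Counter()
    failing_rows = []
    for row in rows:
        broken = [name for name, rule in rules.items() if not rule(row)]
        if broken:
            failures.update(broken)
            failing_rows.append((row, broken))
    return failures, failing_rows


# Hypothetical transformed records.
rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "bad-address", "age": 29},
    {"id": 3, "email": "c@example.com", "age": -1},
    {"id": 4, "email": "", "age": 200},
]

# Hypothetical quality rules.
rules = {
    "email_has_at": lambda r: "@" in r["email"],
    "age_in_range": lambda r: 0 <= r["age"] <= 120,
}

failures, failing_rows = diagnose(rows, rules)

for rule_name, count in failures.most_common():
    print(f"{rule_name}: {count} failing row(s)")
```

A report like this points engineers at the rule, and therefore the transformation or source field, that needs attention in the next iteration.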

The Advantage of ELTV Lies in Its Balancing Act

ELTV strikes a healthy balance between the strengths of both ETL and ELT:

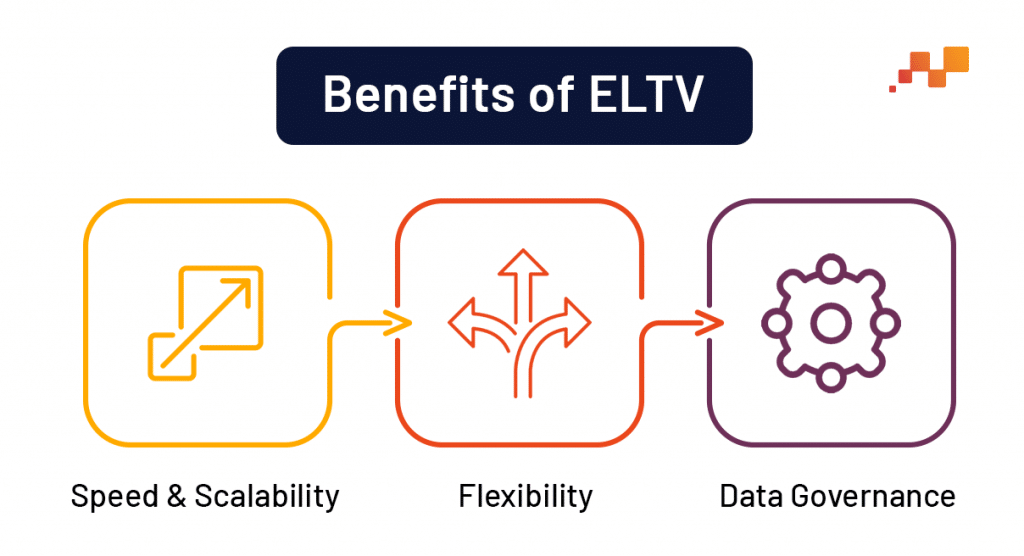

- Speed and Scalability: Like ELT, ELTV benefits from the ability to process large and complex datasets quickly by leveraging the parallel processing capabilities of modern data lakes.

- Flexibility: Given that transformations occur within the data lake, ELTV allows for on-the-fly adjustments and reconfigurations of data schemas to accommodate evolving business and analytical needs.

- Data Governance: The validation step extends the data governance capabilities within ELTV, ensuring high standards of data quality and compliance are met before data is used in decision-making processes.

What Should We Consider During ELTV Implementation?

While ELTV offers compelling benefits, careful planning is crucial:

- Complexity: The addition of a validation layer introduces complexity into the data integration process. Organizations must design this layer deliberately and allocate adequate resources to manage it so that the pipeline keeps operating smoothly.

- Data Lineage & Quality Checks: It is crucial to maintain a clear lineage of data transformations and validations. This transparency helps in troubleshooting and validating data, ensuring that the data used in decision-making is accurate and trustworthy.
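One lightweight way to keep such a lineage is to record an entry each time a step writes a table, then walk those entries backwards when troubleshooting. The sketch below assumes a hypothetical `LineageLog` class and made-up table names and row counts; real deployments would more likely rely on a catalog or lineage tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageEntry:
    step: str
    source: str
    target: str
    rows_in: int
    rows_out: int
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class LineageLog:
    def __init__(self):
        self.entries = []

    def record(self, step, source, target, rows_in, rows_out):
        entry = LineageEntry(step, source, target, rows_in, rows_out)
        self.entries.append(entry)
        return entry

    def trace(self, target):
        """Walk backwards from a table to its origin, returned oldest step first."""
        path, seen, current = [], set(), target
        while current not in seen:
            seen.add(current)  # guards against cycles in the recorded entries
            entry = next((e for e in self.entries if e.target == current), None)
            if entry is None:
                break
            path.append(entry)
            current = entry.source
        return list(reversed(path))


# Hypothetical run: table names and row counts are illustrative only.
log = LineageLog()
log.record("load", "crm_export", "raw_customers", 100, 100)
log.record("transform", "raw_customers", "customers", 100, 98)
log.record("validate", "customers", "customers_validated", 98, 95)

path = log.trace("customers_validated")
for e in path:
    print(f"{e.step}: {e.source} -> {e.target} ({e.rows_in} in, {e.rows_out} out)")
```

Comparing `rows_in` and `rows_out` per step shows where records were dropped, which is often the first question when a downstream number looks wrong.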

ELTVating Your Data Integration Strategy

As the landscape of data integration continues to evolve with technological advancements, ELTV positions itself as a potentially dominant model. By incorporating iterative quality improvements and robust data governance within its framework, ELTV offers a compelling strategy for organizations aiming to leverage their data assets effectively. However, the choice between ELTV, ELT, ETL, or a hybrid approach should be dictated by specific organizational needs and the nature of the data ecosystem.

In conclusion, while ELTV brings forth significant advantages, particularly in data governance and quality assurance, its implementation should be carefully planned and aligned with the broader data management strategy of the organization.