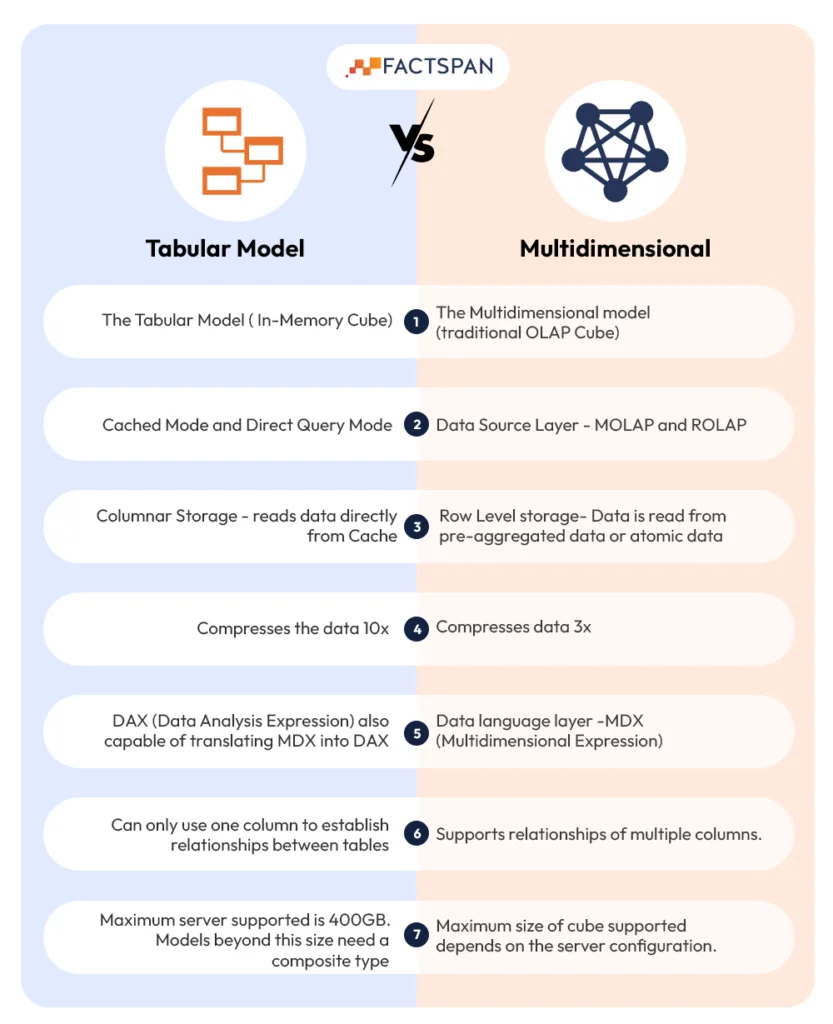

Multidimensional cube is a well-established technology based on open standards. It is widely adopted by various BI software vendors. However, its implementation can be difficult. Tabular technology, on the other hand, provides a relational modeling method that is considered more user-friendly by developers. Ultimately, tabular models are more manageable and require less effort to develop.

With a vast amount of data, the traditional SQL multidimensional model may not be able to keep up with the demands of businesses. For this very reason, more and more organizations are shifting to Azure Tabular Models to maximize their reporting performance.

In any business, analyzing and reporting data is crucial for decision making. But when it comes to large and complex data sets, traditional methods such as multidimensional cubes can become slow and inefficient. The queries can take a long time to extract data for reporting, resulting in a poor user experience. The way to solve this problem is by analyzing the existing multidimensional cube in SQL Server Analysis Services and converting it to a tabular model using Azure Analysis Services.

Exploring Inefficiencies in the Existing Multidimensional Cube Model

The SQL multidimensional cube currently used by many organizations is slow and leads to inefficient reporting. It requires complex queries to extract data from it. Power BI and Excel sheets often take more time than expected to refresh, and sometimes fail altogether. This problem is further compounded by the fact that user queries often time out.

Bridging the Gap Between Data Sources for Comprehensive Reports

To enhance the efficiency of data sources, the first step is to examine the cube\’s design and schema to determine whether it is optimized for reporting. We can also look at the query logs to see which queries are taking the most time and identify patterns in the data. This analysis will help us to understand the underlying issues and the areas that need improvement.

Converting to Azure Analysis Services Tabular Model

Once we have completed the analysis and identified the areas that need improvement, we can begin the process of converting the multidimensional cube to a tabular model. This involves creating a new tabular model using Azure Analysis Services and then migrating the data from the multidimensional cube to the new model.

The tabular model is a columnar database that is optimized for in-memory analytics. This means that it can handle large volumes of data and complex queries more efficiently than the multidimensional cube. It also has built-in compression and caching mechanisms that further improve performance.

The process can be carried out using tools such as SQL Server Data Tools for Visual Studio. The process involves mapping the multidimensional cube’s schema to the tabular model’s schema and then copying the data.

We can also optimize the data model during this process by eliminating unnecessary data and creating relationships between the tables. This will further improve the performance of the tabular model.

Deploying the Tabular Model

Once the data has been migrated, we can deploy the new Azure Analysis Services tabular model. This involves publishing the model to Azure Analysis Services and configuring it for use with reporting tools such as Power BI and Excel.

By deploying the new tabular model, we can provide faster and more efficient reporting for our users. The queries will run quickly, and users will no longer experience timeouts or slow refresh times.

Why choose Azure Tabular Models?

There are several reasons why organizations are choosing Azure Tabular Models over traditional multidimensional models. Here are a few:

Performance: Azure Tabular Models provide faster query response times compared to multidimensional models. They are designed to handle large amounts of data and provide real-time reporting capabilities.

Scalability: The models are highly scalable and can handle large amounts of data without compromising on performance. They can be easily scaled up or down depending on the needs of the organization.

Cost-effective: Businesses will find Azure Tabular Models cost-effective compared to traditional multidimensional models. They require less storage and maintenance costs, making them a more affordable option for organizations.

Streamline Your Reporting Processes to Maximize Your Data Insights

By leveraging the benefits of the tabular model, businesses can achieve a significant improvement in reporting performance. Migrating to the new model can lead to faster and more efficient reporting, resulting in better decision-making based on data. Studies have shown that converting to a tabular model can improve query performance by up to 10 times compared to a multidimensional cube. This is due to the in-memory analytics capability of the tabular model, which allows for faster data processing and retrieval.

With Azure Analysis Services, businesses can deploy their tabular models to the cloud and scale up or down based on their reporting needs. This provides flexibility and cost savings compared to on-premise solutions. By embracing the power of tabular models, companies can unlock the full potential of their data and achieve faster and efficient decision-making.

Also read: Databricks Vs Snowflake & How Forecast Analytics Decreases your Bounce Rate